Hands-On GPU Computing With Python: Explore The Capabilities Of GPUs For Solving High Performance Computational Problems | lagear.com.ar

3.1. Comparison of CPU/GPU time required to achieve SS by Python and... | Download Scientific Diagram

machine learning - How to make custom code in python utilize GPU while using Pytorch tensors and matrice functions - Stack Overflow

Python: use GPU instead of CPU

A Complete Introduction to GPU Programming With Practical Examples in CUDA and Python - Cherry Servers

Why is the Python code not implementing on GPU? Tensorflow-gpu, CUDA, CUDANN installed - Stack Overflow

Beyond CUDA: GPU Accelerated Python for Machine Learning on Cross-Vendor Graphics Cards Made Simple | by Alejandro Saucedo | Towards Data Science

Hands-On GPU Programming with Python and CUDA: Explore high-performance parallel computing with CUDA: 9781788993913: Computer Science Books @ Amazon.com

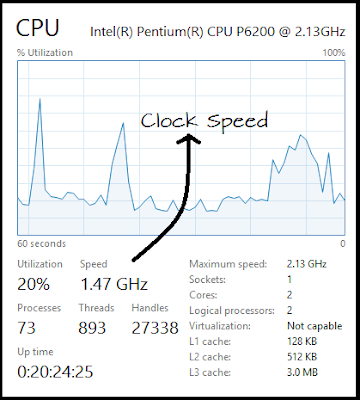

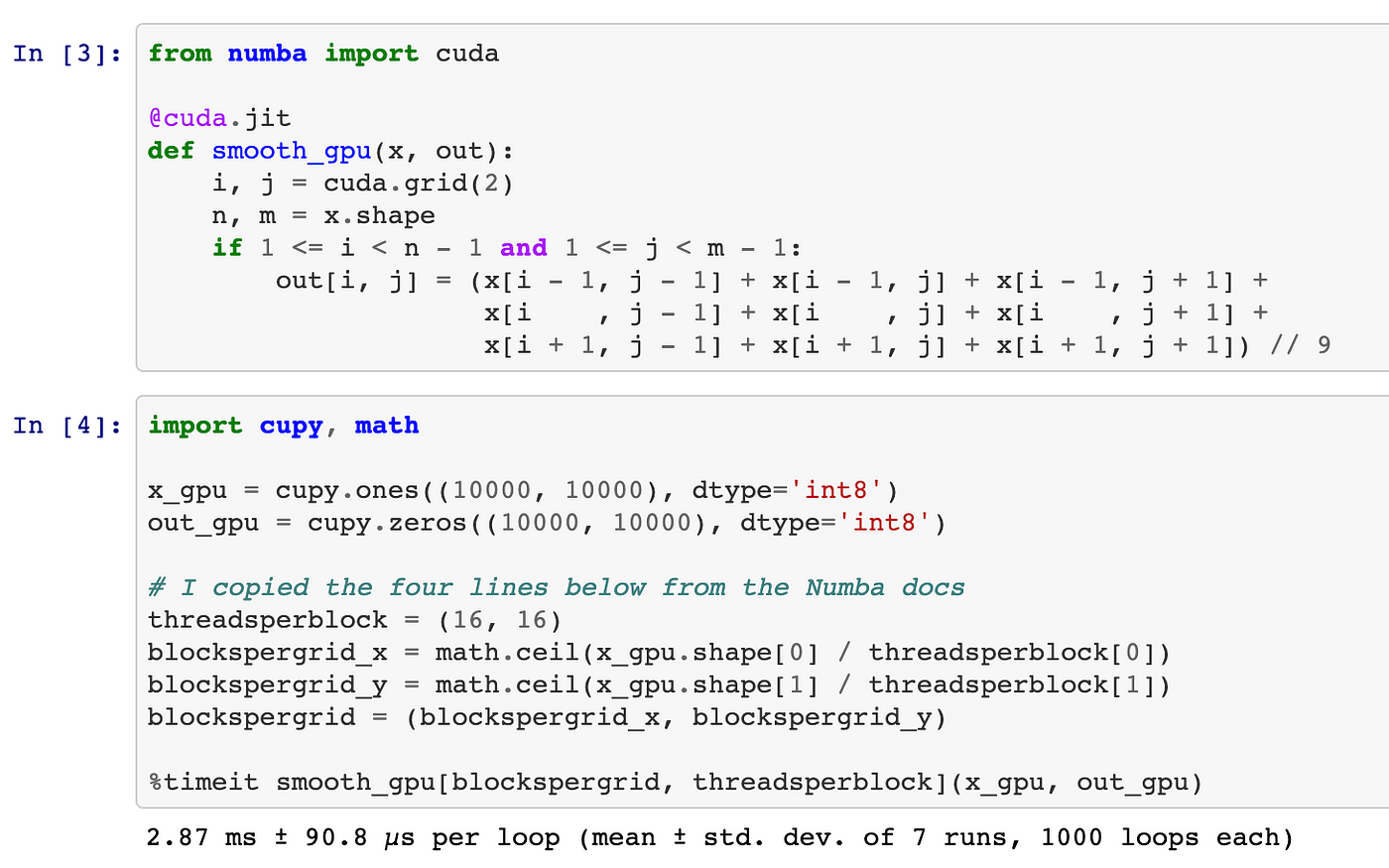

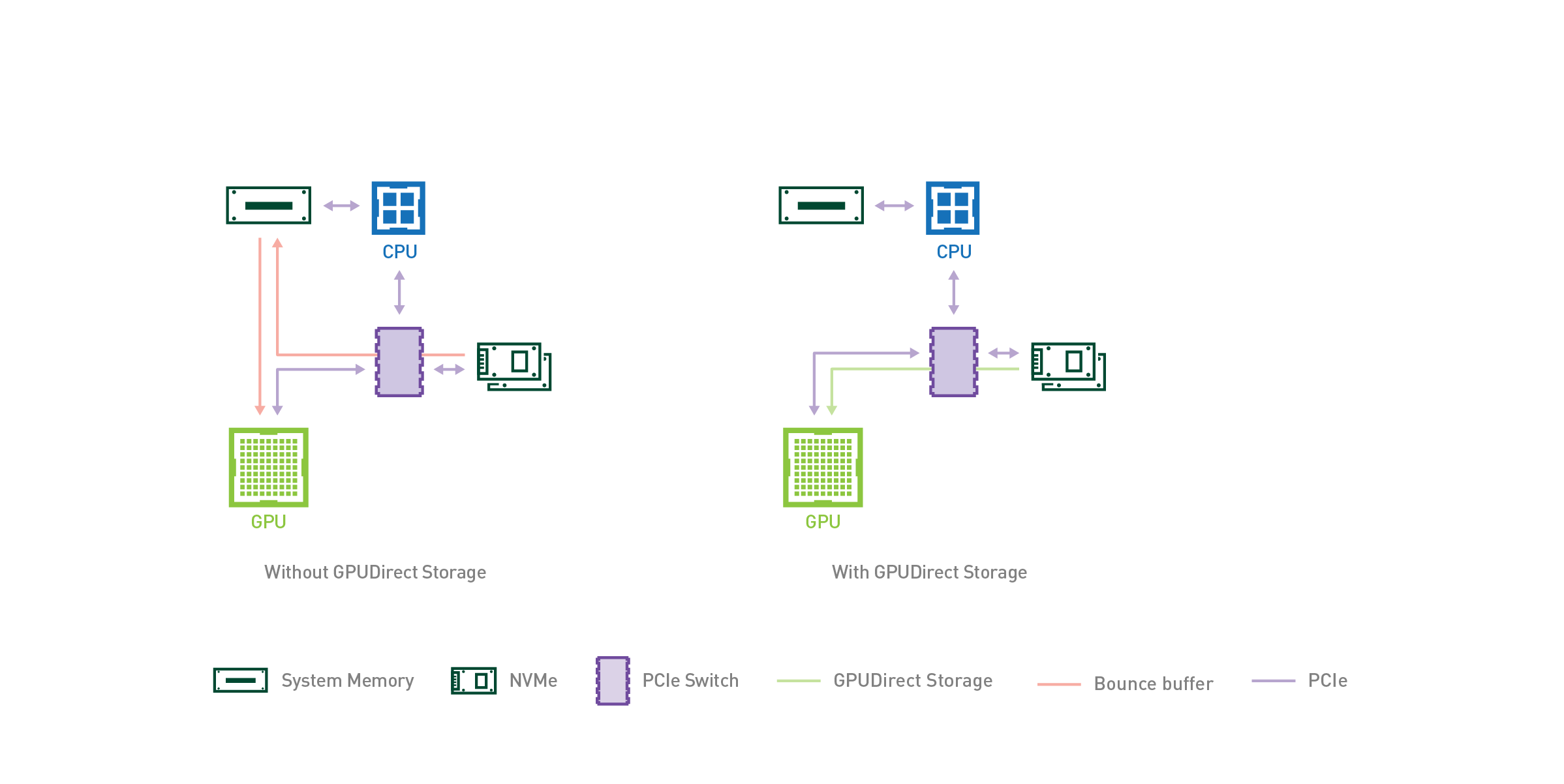

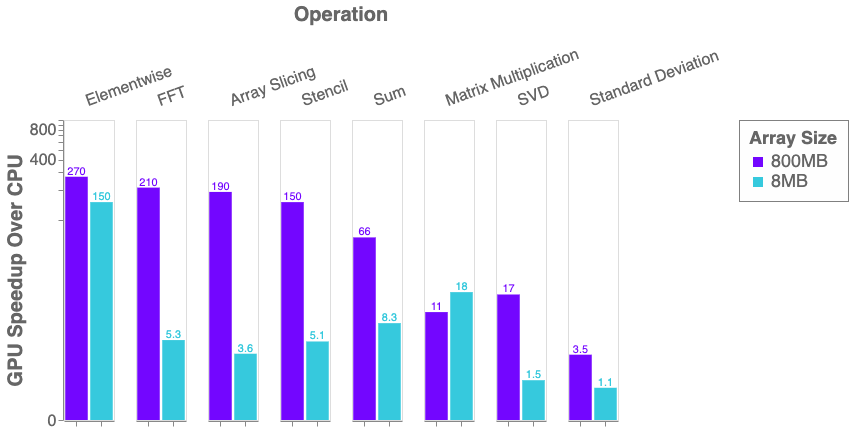

Python, Performance, and GPUs. A status update for using GPU… | by Matthew Rocklin | Towards Data Science


Using the Python Keras multi_gpu_model with LSTM / GRU to predict Timeseries data - Data Science Stack Exchange

Executing a Python Script on GPU Using CUDA and Numba in Windows 10 | by Nickson Joram | Geek Culture | Medium
